I'm not going to try to write an IOS-to-JunOS conversion guide; this is just my list of useful commands, saved after spending a couple of months installing a ton of Juniper EX switches. I couldn't write one if I tried anyhow, as most of the switches I touch these days are Dell OS10, Mellanox Onyx, or NX-OS... not a lot of IOS there.

Every vendor has a way to configure multiple switches to support MLAGs - LACP channels across two or more switches. It varies as to whether 'stacking' - where the switches share a single conjoined control plane - is required, which leads to issues when upgrade time comes. Juniper does require Virtual Chassis for this; Dell VLT / Mellanox / Cisco vPC are slightly more distant, so you still have that control plane separation, which is nice. In some environments I've managed to keep a pair of Juniper EX separate because ESXi / Cohesity / Pure could all support redundancy without LACP, which is preferable IMHO.

Juniper virtual chassis is straightforward: turn LLDP on and connect the switches together (over 40 or 100G only), and if they're the same type they try to do it for you. If they're different types of switch you may need to manually configure mixed-mode, which requires a reboot. Some switches don't have the 40/100G ports set as vc-port out of the box; fixing that is simply 'request virtual-chassis vc-port set ...'

Upgrades may not be quite so straightforward - my preference is to update individual switches to a sensible release when they're fresh out of the box, then leave well alone. I haven't yet tried any features new enough for this to cause an issue. On the bench a USB key is the easiest way to get an image on; once the switch is configured, putting images on a Linux VM running Nginx is easiest, as you can then install the image direct from the HTTP URL rather than fighting to copy it into /var/tmp first, which frequently fails for no good reason. If virtual chassis pairs are not in production you can install the image on all of them then power cycle so they all come up with the new image. I've failed to do it more gracefully, as the virtual chassis doesn't re-establish once the members are on different versions, so then you can't reboot the others - unless you have OOB access of course.
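A rough sketch of the install-from-URL approach, run from operational mode. The web server address (192.0.2.10), the directory and the image file name are placeholders I've made up for illustration; substitute the image that matches your switch. The lines starting with # are just annotations.

```
# Check what is running before you start
show version

# Pull and install the image straight from the web server
# (192.0.2.10 and the path/file name are hypothetical placeholders)
request system software add http://192.0.2.10/junos/<image-file>.tgz
```

The new image only takes effect after a reboot or power cycle.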

My biggest culture shock was seeing the same physical ports listed in the configuration repeatedly as ge-0/0/1 / xe-0/0/1 / et-0/0/1 and having to ensure you put configuration under the right one according to what speed you're going to utilize the interface at. I installed several pairs of EX4650 in a short space of time with each install smoother than the last.

Plan carefully, as you can only set port speed per group of 4 ports, which can be annoying if after your neat cabling job something doesn't come up at the speed you expect (or someone else patches a couple of 10G-only devices into the middle of a bunch of ports set to 25G, so someone has to drive to the DC and move them).

set chassis fpc 0 pic 0 port 20 speed 25g
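To tie the speed setting to the interface naming mentioned earlier, here is a small sketch. It assumes an EX4650-style box where the speed is set on the first port of a group of four (so port 20 covers 20-23) and where ports running at 25G are configured under their et- names. The VLAN name is only an example; check the behaviour on your own platform and release.

```
# Assumption: port 20 is the first port of the group 20-23
set chassis fpc 0 pic 0 port 20 speed 25g

# At 25G the configuration then belongs under et-, not xe- or ge-
set interfaces et-0/0/20 unit 0 family ethernet-switching vlan members newvlan
```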

One thing that bit me is that the supported optics/DAC list is not quite the same between images/switch types, so having tested parts between one pair of switches on the bench, I was losing my mind when those same parts didn't work in the DC... (I've generally had issues with 25G that I don't have with 10/40/100: lots of picky devices, the worst offender being Intel 25G NICs that want Intel cables - which I couldn't find available to purchase.)

'show configuration | display set' - view the configuration as set commands rather than the default curly-brace hierarchy

'show | compare' - see what pending changes you have made in edit mode

'rollback 0' - throw away those changes if you don't like what you see

'show interfaces diagnostics optics' - valuable when doing the L1 hard part, laboriously chasing down why all your fiber links are not up. Even a cheap and cheerful light meter from Techtools or Amazon is invaluable here too.

'show chassis pic pic-slot 0 fpc-slot 0' and 'show chassis hardware' are useful here too for listing out the optics/DACs present in the system.

'run' has a similar function to 'do' in Cisco land, so you can execute an operational mode command from within edit (config) mode.

'monitor start messages' - the equivalent of 'term mon'
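Put together, a typical edit session with these commands might look like the sketch below. The VLAN is only an example change, and 'commit check' and 'commit confirmed' are additions of mine that I find pair well with 'show | compare'. The lines starting with # are annotations.

```
configure
set vlans newvlan vlan-id 123

# Review the pending change, and peek at operational state without leaving edit mode
show | compare
run show vlans

# Validate, then commit with an automatic rollback after 5 minutes unless confirmed
commit check
commit confirmed 5
commit
```

If the diff is not what you wanted, 'rollback 0' before committing discards the pending edits instead.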

To put an IP address on a VLAN, you create an IRB interface:

set interfaces irb unit 123 family inet address 192.168.1.1/24

set vlans newvlan vlan-id 123

set vlans newvlan l3-interface irb.123
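For comparison, this is roughly how those three set commands appear in the normal hierarchical view from 'show configuration' (a sketch showing only the relevant stanzas):

```
interfaces {
    irb {
        unit 123 {
            family inet {
                address 192.168.1.1/24;
            }
        }
    }
}
vlans {
    newvlan {
        vlan-id 123;
        l3-interface irb.123;
    }
}
```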

To create an access port:

set interfaces ge-0/0/5 unit 0 family ethernet-switching vlan members newvlan

To create a trunk port:

set interfaces ge-0/0/6 unit 0 family ethernet-switching interface-mode trunk

set interfaces ge-0/0/6 unit 0 family ethernet-switching vlan members oldvlan

set interfaces ge-0/0/6 unit 0 family ethernet-switching vlan members newvlan
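After committing, a quick way to check that the port landed in the VLANs you expected is something like the following, from operational mode. This assumes a switch using the newer 'interface-mode' style of configuration shown here; command names differ slightly on older releases.

```
# Which interfaces are members of the VLAN
show vlans newvlan

# Mode and VLAN membership as seen from the port
show ethernet-switching interface ge-0/0/6
```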

To add a default route:

set routing-options static route 0.0.0.0/0 next-hop 172.16.1.1

LAG / MLAG / Port channels:

set chassis aggregated-devices ethernet device-count 1 (Or however many LAGs you need total)

set interfaces ae0 aggregated-ether-options lacp active

set interfaces ae0 unit 0 family ethernet-switching interface-mode trunk

set interfaces ae0 unit 0 family ethernet-switching vlan members oldvlan

set interfaces ae0 unit 0 family ethernet-switching vlan members newvlan

set interfaces ge-0/0/0 ether-options 802.3ad ae0

set interfaces ge-0/0/1 ether-options 802.3ad ae0
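For the MLAG case, where the LAG spans two Virtual Chassis members, the member links sit on different FPC numbers. A minimal sketch, assuming a two-member virtual chassis where the second switch is member 1 (so its ports show up as ge-1/0/x), would swap the member lines for the following. The lines starting with # are annotations.

```
# One link on each virtual chassis member (member numbering is an assumption)
set interfaces ge-0/0/0 ether-options 802.3ad ae0
set interfaces ge-1/0/0 ether-options 802.3ad ae0

# After commit, check LACP state and the bundle from edit mode
run show lacp interfaces ae0
run show interfaces ae0 terse
```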

Note that the access class switches and the datacenter class (4500+) have different defaults. Where the access switches have RSTP on by default, the DC switches do not, so I 'set protocols rstp interface xe-0/0/47' just on ports that I know will be up/down links to other switches.

Recovering an old SRX300 with an unknown password

- that was also configured to ignore the config reset button.

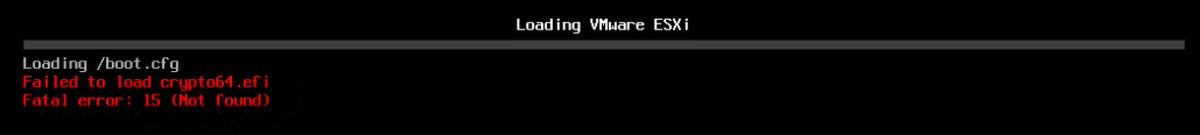

Find an image and put it on a small FAT32 USB stick (a 1G stick worked for me while an 8G did not; I got 'cannot open package (error 22)'). Insert the stick and boot. Ignore the first prompt asking if you want to interrupt the boot process, but press space on the second. Then at the loader prompt (notice the triple slash):

loader> install file:///junos-srxsme-10.4R3.11.tgz

This takes a long time, as it reformats the internal USB file system, but the result is a new blank system, as if the reset config button still worked. I also needed to 'delete system phone-home' and 'delete system autoinstallation' before web access would work. To permit SSH from untrusted:

set security zones security-zone untrust interfaces ge-0/0/0.0 host-inbound-traffic system-services ssh
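Pulling the post-install steps together, a first session on the freshly wiped SRX might look roughly like this sketch. The root password and 'set system services ssh' lines are my additions rather than something from the notes above: a factory-default box needs a root password before it will commit, and the SSH service has to be enabled for the zone rule to be of any use. The lines starting with # are annotations.

```
configure

# Clear the factory-default features that got in the way of web access
delete system phone-home
delete system autoinstallation

# Prompts interactively for the new password
set system root-authentication plain-text-password

# Enable SSH and allow it in from the untrust zone
set system services ssh
set security zones security-zone untrust interfaces ge-0/0/0.0 host-inbound-traffic system-services ssh

commit
```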