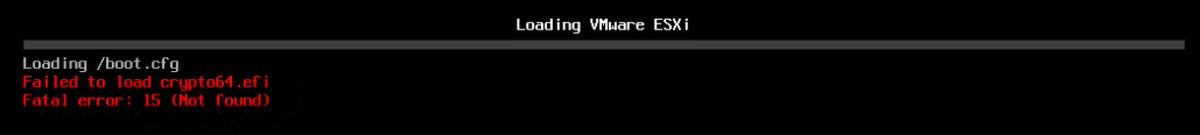

So I broke one of my ESXi hosts by installing 7.0U2 as a patch baseline instead of an upgrade baseline in Lifecycle Manager (formerly Update Manager). It failed to boot, getting stuck at 'loading crypto...'.

Supposedly an easy fix: boot from CD, do an upgrade install over the top of the existing install, and boot right back into the cluster.

The forums were full of other people hitting the same thing and using iDRAC, iLO, etc. to mount the image and recover, so I tried to do the same with Supermicro IPMI. That's how I installed 6.7 on these hosts in the first place, so I knew the BMC could mount an ISO from an SMB share. However, that install was done at home, where I was mounting the images off a Synology. I dimly remembered having to mess with the Synology to get it working but couldn't remember exactly how.

After wasting a long time trying to mount the ISO off a Windows box, I gave up and installed a fresh Ubuntu VM to use as the SMB server, figuring (correctly) that Samba's logging would help me work out what was going on. It turns out Supermicro's SMB client not only speaks only SMB1, which needs 'server min protocol = NT1', but also doesn't support any decent authentication methods. After also adding 'ntlm auth = yes', the mount worked and I could recover the host.

The Samba VM also got 150 random SMB hits from the Internet during its brief lifespan, though they all produced either zero-length log files or ones filled with auth failures. (My IPMI ports are out on the internet, but with ACLs limiting access to just a few static IPs I have access to. I'm not completely crazy.)

```ini
[global]
server min protocol = NT1
ntlm auth = yes

[shared]
path = /home/simon/shared
valid users = simon
read only = no
```
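If you want to reproduce the share, a minimal sketch of the Ubuntu side might look like the following. It writes the same settings as the config above to a file, with comments on why each global setting is there, and runs `testparm -s` against it if Samba is installed. `CONF`, `SHARE_PATH` and `SHARE_USER` are illustrative variables (not from the original setup); `CONF` defaults to `./smb.conf` so it can be tried without touching `/etc/samba`. Treat it as a throwaway recovery setup rather than a hardened one, since it deliberately re-enables SMB1 and NTLMv1.

```shell
#!/bin/sh
# Sketch: write a minimal smb.conf for serving an ISO to a Supermicro BMC,
# then sanity-check it with testparm if Samba is installed.
# CONF, SHARE_PATH and SHARE_USER are illustrative variables; CONF defaults
# to a local file so nothing under /etc/samba is touched.
set -eu

CONF="${CONF:-./smb.conf}"
SHARE_PATH="${SHARE_PATH:-/home/simon/shared}"
SHARE_USER="${SHARE_USER:-simon}"

cat > "$CONF" <<EOF
[global]
   # The BMC's SMB client only speaks SMB1; recent Samba versions refuse it
   # unless the minimum protocol is lowered to NT1.
   server min protocol = NT1
   # Allow NTLMv1 authentication, which recent Samba versions disable
   # by default.
   ntlm auth = yes

[shared]
   path = ${SHARE_PATH}
   valid users = ${SHARE_USER}
   read only = no
EOF

if command -v testparm >/dev/null 2>&1; then
  # -s: print the parsed config without waiting for a keypress.
  testparm -s "$CONF" || echo "testparm reported problems in $CONF" >&2
else
  echo "testparm not found; install samba to validate $CONF" >&2
fi
```

To actually serve the ISO, copy or merge the result into `/etc/samba/smb.conf`, give the user a Samba password with `sudo smbpasswd -a simon` (Samba keeps its own password database, separate from the Linux login), put the ISO in the share directory, and restart the daemon with `sudo systemctl restart smbd`.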

To get the IPMI (BMC) settings of the local system from ESXi, use either of:

```shell
localcli hardware ipmi bmc get
esxcli hardware ipmi bmc get
```
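If you only want one value, such as the BMC's IP address, a small filter over that output can help. This is a sketch that assumes the command prints one `Field: value` pair per line and that the address field is called `IPv4Address`. Both are assumptions, so check the real output on your build first. It also assumes `awk` is available in the ESXi shell (its BusyBox should provide it). `bmc_field` is a hypothetical helper name, not part of ESXi.

```shell
#!/bin/sh
# Hypothetical helper: read "Field: value" lines on stdin and print the
# value for the named field. Exits 1 if the field is not found.
# Assumption: the BMC commands print their output in this "Field: value" shape.
bmc_field() {
  awk -v f="$1" '
    {
      key = $0
      sub(/^[ \t]+/, "", key)    # drop leading indentation
      sub(/:.*/, "", key)        # keep only the text before the first colon
      if (key == f) {
        val = $0
        sub(/^[^:]*:[ \t]*/, "", val)  # keep everything after "Field: "
        print val
        found = 1
        exit
      }
    }
    END { exit (found ? 0 : 1) }'
}

# Only query the BMC when actually running on an ESXi host.
# IPv4Address is an assumed field name.
if command -v esxcli >/dev/null 2>&1; then
  esxcli hardware ipmi bmc get | bmc_field IPv4Address
fi
```

The same filter works on `localcli` output, since the helper only reads stdin and doesn't care which command produced it.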